Sense and polarity, or why meaning can drive language change

Generally a sentence can be negative or positive depending on what one actually wants to express. Thus if I’m asked whether I think that John’s new hobby – say climbing – is a good idea, I can say It’s not a good idea; conversely, if I do think it is a good idea, I can remove the negation not to make the sentence positive and say It’s a good idea. Both sentences are perfectly acceptable in this context.

From such an example, we might therefore conclude that any sentence can be made positive by removing the relevant negative word – most often not – from the sentence. But if that is the case, why is the non-negative response I like it one bit odd, even unacceptable, when its negative counterpart I don’t like it one bit is perfectly acceptable and natural?

This contrast has to do with the expression one bit: notice that if it is removed, then both negative and positive responses are perfectly fine: I could respond I don’t like it or, if I do like it, I (do) like it.

It seems that there is something special about the phrase one bit: it wants to be in a negative sentence. But why? It turns out that this question is a very big puzzle, not only for English grammar but for the grammar of most (all?) languages. For instance, in French, the expression bouger/lever le petit doigt ‘lift a finger’ must appear in a negative sentence. Thus if I know that John wanted to help with your house move and I ask you how it went, you could say Il n’a pas levé le petit doigt (lit. ‘He didn’t lift the small finger’) if he didn’t help at all, but you could not say Il a levé le petit doigt (lit. ‘He lifted the small finger’) even if he did help to some extent.

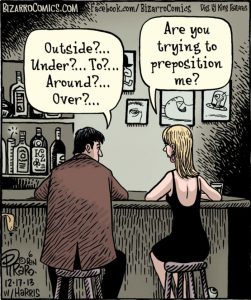

Expressions like lever le petit doigt ‘lift a finger’, one bit, care/give a damn and own a red cent are said to be polarity sensitive: they only really make sense if used in negative sentences. But this in itself is not the most interesting property.

What is much more interesting is why they have this property. There is a lot of research on this question in theoretical linguistics. The proposals are quite technical but they all start from the observation that most expressions that need to be in a negative context to be acceptable are expressions of minimal degrees and measures. For instance, a finger or le petit doigt ‘the small finger’ is the smallest body part one can lift to do something; a drop (in the expression I didn’t drink a drop of vodka yesterday) is the smallest observable quantity of vodka, etc.

Regine Eckardt, who has worked on this topic, formulates the following intuition: ‘speakers know that in the context of drinking, an event of drinking a drop can never occur on its own – even though a lot of drops usually will be consumed after a drinking of some larger quantity.’ (Eckardt 2006, p. 158). However, the intuition goes, the occurrence of this expression in a negative sentence is acceptable because it denies the existence of events that consist of just drinking one drop.

What this means is that if Mary drank a small glass of vodka yesterday, it is technically true to say She drank a drop of vodka (since the glass contains many drops), but it would not be very informative, certainly not as informative as saying the equally true She drank a glass of vodka.

However, imagine now that Mary didn’t drink any alcohol at all yesterday. In this context, I would be telling the truth if I said either one of the following sentences: Mary didn’t drink a glass of vodka or Mary didn’t drink a drop of vodka. But now it is much more informative to say the latter. To see this, consider the following: Mary didn’t drink a glass of vodka could describe a situation in which Mary didn’t drink a whole glass of vodka yesterday but still drank some, maybe just a spoonful. If, however, I say Mary didn’t drink a drop of vodka, then this can only describe a situation where Mary drank neither a glass nor even a little bit of vodka. In other words, saying Mary didn’t drink a drop of vodka yesterday is more informative than saying Mary didn’t drink a glass of vodka yesterday, because the former sentence describes a very precise situation whereas the latter is a lot less specific as to what it describes (i.e. it could be uttered in a situation in which Mary drank a spoonful of vodka, or maybe a cocktail that contains 2 ml of vodka, etc.).
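For readers who like to see the reasoning spelled out mechanically, here is a minimal sketch of the argument in Python. It is only a toy model under stated assumptions: a ‘situation’ is just the amount of vodka Mary drank, the amounts and thresholds (0.05 ml for a drop, 40 ml for a glass) are made up for illustration, and ‘more informative’ is taken to mean ‘compatible with strictly fewer situations’. None of this comes from Eckardt’s own formalisation.

```python
# Toy model: a situation is the amount of vodka (in ml) Mary drank yesterday.
# All numbers below are illustrative assumptions, not values from the text.
DROP_ML = 0.05
GLASS_ML = 40.0

# nothing, one drop, 2 ml in a cocktail, a spoonful, a glass, two glasses
SITUATIONS = {0.0, 0.05, 2.0, 5.0, 40.0, 80.0}


def drank_at_least(threshold_ml):
    """Situations in which 'Mary drank (at least) this much' is true."""
    return {s for s in SITUATIONS if s >= threshold_ml}


def negate(meaning):
    """Situations in which the negated sentence is true."""
    return SITUATIONS - meaning


def more_informative(a, b):
    """a is more informative than b if it is true in strictly fewer situations."""
    return a < b  # proper-subset test on sets


drank_drop = drank_at_least(DROP_ML)
drank_glass = drank_at_least(GLASS_ML)

# Positive sentences: 'a glass' is the more informative claim.
print(more_informative(drank_glass, drank_drop))

# Negative sentences: the ranking flips, and 'a drop' becomes more informative.
print(more_informative(negate(drank_drop), negate(drank_glass)))
```

In this model, Mary didn’t drink a drop is true only in the situation where she drank nothing, whereas Mary didn’t drink a glass is also true if she had a drop, a spoonful or a weak cocktail. Negation reverses the ranking: the minimal expression is the least informative choice in a positive sentence and the most informative one in a negative sentence.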

Using expressions of minimal degrees/measures in negative environments makes sentences a lot more informative. This, it seems, is part of the reason why languages like English have changed such that these expressions are now only usable in negative sentences.

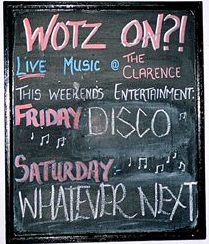

